Long-Running Workflows

When you hear “workflow,” you’re likely to imagine processes or state machines: a collection of tasks to accomplish a goal like processing a loan, building out some infrastructure, or ordering some food. These Workflows can take a long time, but they generally have an end. But what if they didn’t?

There’s no rule or even guideline that determines whether a Workflow is long-running or short-running. The Workflows we’ll talk about here, however, are distinguished by not having a definite end. Workflows in this pattern could run for less than a second or for many years and, depending on the application, be considered “long-running” in both cases.

We often call this pattern “Entity Workflows.” It is similar to the Actor Model: a Workflow Execution represents one occurrence of some kind of entity, like a customer’s lifecycle through their purchasing journey, a customer’s cart, a product inventory, or a bank account.

This guide assumes that you have a basic working knowledge of Temporal, including Workflows, Activities, Signals, and Queries. Reading this guide will help you understand many of the situations and Temporal features you may encounter while building your own long-running Workflows.

Prerequisite Terminology

The following additional terms and concepts will be helpful in understanding the remainder of this guide:

Entity Workflow

Though not an SDK primitive or core Temporal concept, we’ll use the term “Entity Workflow” in this guide to refer to Workflows that are used to represent something (an “entity”) that potentially lives forever. Examples include customers, an inventory of items, an account, and more. These contrast with Workflows that are used for processes with a definite end, like placing a food order or waiting for a Timer to finish.

Long-Running

There’s no rule that determines if a given Workflow is long- or short-running. There’s no “if runtime > N seconds” threshold a Workflow must pass for it to be dubbed long-running; it could run for fractions of a second or many years, depending on your application. Here, we’ll define “long-running” as Workflows that run for an indefinite amount of time; that is, you don’t know in advance when they’ll complete. For example, if you have a Workflow that maintains a customer’s loyalty points, you won’t know when the Workflow Execution representing their account will complete until the customer decides to close the account.

Event and Event History

Temporal ensures the durability of Workflows by recording the state of a Workflow throughout its life. These state changes are dubbed “Events” and the log “Event History.” By receiving and “replaying” a Workflow’s Event History, a Worker is able to resume executing the Workflow at the appropriate place with the appropriate state.

The Event History has a hard limit of 50Ki (51,200) Events or 50MB in total size. To continue a Workflow past these limits, Temporal provides Continue-As-New.

Continue-As-New

Continue-As-New is a feature that allows you to keep a Workflow running but with a new Run ID and a new Event History. Continue-As-New completes the current Workflow Execution and atomically starts a new one with the same Workflow ID, so you don’t have to worry about the race conditions that come from manually closing and restarting a Workflow Execution.

Note: Some Temporal SDKs define or implement their Continue-As-New APIs as some kind of “error” (for example, in Go, you would create a NewContinueAsNewError). Despite the name, it’s not really an “error” in the commonly understood sense. Rather, it’s an artifact of how these SDKs distinguish a Workflow Execution’s completion for Continue-As-New from successful completion.

Additional Background on Entity Workflows

There are two main types of “long-running Workflows” that we’ll discuss here:

- Some kind of process that takes a long time (see the definition of “long-running” above)

- A Workflow that represents something (see the definition of “Entity Workflow” above)

The difference is in concept only: both could run quickly or forever, both may need to interact with systems outside the Workflow, and both might do so much work as to require Temporal’s Continue-As-New.

For example, these two Workflows could have very similar implementations:

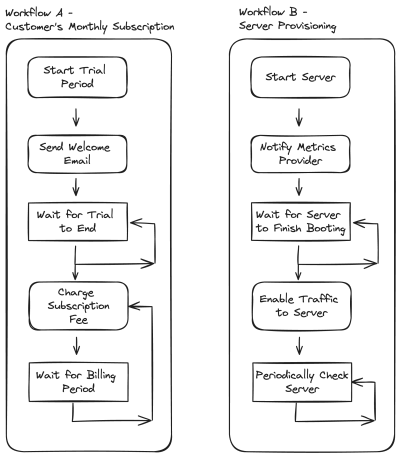

The Workflow on the left (A) represents a customer’s subscription to a service, with periodic billing. The Workflow on the right (B) represents a server provisioning process. Both start something they must wait for – (A) a time period to pass; (B) a server to finish booting – then send a message to an external system, and then periodically take a related action – (A) charge the customer the subscription fee; (B) enable traffic to the server.

While distinctly different from each other, these two Workflows must both:

- Sleep/wait for a potentially very long time, possibly running Activities concurrently with a Timer (checking if the customer’s information has changed, or checking if the server is up yet)

- Indefinitely repeat an Activity, waiting for some time between invocations (billing the customer; validating that the server is still up)

- Cancel that loop based on some external condition (the customer has canceled; the server is down)

They also share refinements and edge cases. Both need to:

- Handle Signals or Updates to break the loop and end the Workflow or update internal state

- Handle Queries to report status to the outside world

- Track the Workflow Execution’s Event History so that it doesn’t exhaust Temporal’s limits

- Make code fixes or updates while there are actively running Workflows

To simplify, we’ll cover these in two categories:

- How to keep a Workflow Execution running indefinitely

- How to interact with these Workflow Executions

The last point, applying updates to Workflow code, is covered in a separate guide on Workflow Versioning.

This guide also does not cover long-running Activities. For that, consult the documentation on Activity Heartbeats.

How to Keep a Workflow Running Indefinitely Long

There are two limits in Temporal that keep a single Workflow Execution from actually running forever: 50Ki (51,200) Events in the Event History and an Event History size of 50MB. If either limit is reached, the Workflow is Terminated with the error “Workflow history size / count exceeds limit.”

These limits are in place to address how much work a Worker needs to do when it encounters a Workflow it doesn’t know anything about. This happens when either the Workflow Execution doesn’t exist in cache (either it never was there, or it was evicted) or the Worker crashed and restarted. When a Worker is looking to resume running a Workflow, it needs to replay the prior history to resume at the right point. Let’s consider an example.

Imagine we have designed a Workflow to run infinitely long and that this Workflow does a fair amount of work: say, a Workflow that acts as a proxy between a temperature sensor floating in the ocean and the rest of the internet. This Workflow must be able to handle a large number of Signals and Queries—in order to push updates or get the latest data, respectively—and a large number of Activities—to actually make the network call to retrieve the real data from the sensor in question.

Each of these Signals and Activities (among other things, like their inputs and outputs) adds to the Workflow’s Event History. Doing so means that, in the scenario where a Worker crashes and subsequently restarts, it can “replay” the history to be able to resume from exactly where it left off with exactly the state it previously had.

Replaying that history takes time. When the history is small, the delay is usually acceptable. At the other extreme, if the Event History were very large, replaying it to get back to doing real work could take so long that the Workflow Execution is unacceptably delayed in making forward progress.

Continue-As-New

The situation described above is why Continue-As-New exists. It allows a Workflow to clear its Event History and start anew while keeping the same Workflow ID. Continue-As-New is like stackless recursion: call the same function with different parameters to keep processing data, but without a growing call stack that risks a stack overflow.
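The recursion analogy can be made concrete with a small plain-Go sketch. There is no Temporal SDK involved, and every name here is hypothetical. Each call to `run` processes part of the input and, once its local history reaches an illustrative threshold, returns the state for the next run instead of calling itself. The `drive` loop plays the role of the Server, starting each new run with an empty history.

```go
package main

import "fmt"

// state is everything carried from one run to the next. Each run starts with
// an empty history, so state must fully describe progress so far.
type state struct {
	processed int // number of items already handled
	sum       int // running total
}

// runResult is what a run hands back: either a final value or the input for
// the next run (the "continue as new" case).
type runResult struct {
	done      bool
	sum       int
	nextInput state
}

// maxEventsPerRun is an illustrative threshold, not a Temporal limit.
const maxEventsPerRun = 3

// run handles items starting at in.processed, recording one "event" per item
// in a history that is local to this run. When the history reaches the
// threshold and work remains, it returns the next input instead of recursing.
func run(items []int, in state) runResult {
	var history []string // always empty at the start of a run
	for in.processed < len(items) {
		in.sum += items[in.processed]
		in.processed++
		history = append(history, fmt.Sprintf("handled item %d", in.processed))
		if len(history) >= maxEventsPerRun && in.processed < len(items) {
			return runResult{nextInput: in} // "continue as new"
		}
	}
	return runResult{done: true, sum: in.sum}
}

// drive plays the role of the Server: it keeps starting new runs with the
// carried-over input until one completes, and reports how many runs it took.
func drive(items []int) (sum, runs int) {
	in := state{}
	for {
		runs++
		res := run(items, in)
		if res.done {
			return res.sum, runs
		}
		in = res.nextInput
	}
}

func main() {
	sum, runs := drive([]int{1, 2, 3, 4, 5, 6, 7})
	fmt.Println(sum, runs) // 28 3
}
```

Because `state` must fully describe progress, the same design pressure applies as in a real Workflow: everything the next run needs has to travel in its input.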

There are a few considerations when incorporating Continue-As-New into your Workflow:

- Since “continuing as new” means there’s an empty Event History, your Workflow function is starting from a completely clean state. Its inputs and initialization need to be designed such that your implementation can distinguish (or won’t need to distinguish) between a continuation of a previous Workflow instance and a brand-new one.

For example, in a monthly billing or subscription Workflow, the pseudocode for handling this might look like this:

```
func BillingSubscription(subscription) -> error:
    if subscription.trialExpirationDatetime > workflow.now():
        workflow.sleep(subscription.trialExpirationDatetime - workflow.now())

    while not subscription.canceled:
        workflow.sleep(subscription.nextBillingCycleDatetime - workflow.now())
        ChargeCustomer(subscription.customerInfo)
        # Advance to the next cycle so the next iteration doesn't charge again
        # immediately (billingPeriod is a hypothetical field).
        subscription.nextBillingCycleDatetime += subscription.billingPeriod

        if workflow.eventHistoryLength > 25K:
            return workflow.continueAsNew(BillingSubscription, subscription)

    return null
```

That is, the inputs to the function (in this example, the options within the `subscription` object) are designed such that it doesn’t matter whether it’s called for a new subscription or the continuation of an existing one.

Note that the exact SDK calls into `workflow` will differ for each language. For example, the above `workflow.continueAsNew` call in Go would be `return workflow.NewContinueAsNewError(ctx, BillingSubscription, subscription)`, whereas in Python it would be `workflow.continue_as_new(subscription)`.

- Because Continue-As-New starts a new run of the current Workflow, you will lose any pending Signals unless you drain and/or process them first. Handling Signals also generates Events (see below), so drain outstanding Signals before the Event History gets too close to the limit: if you wait until its length is extremely close to 50K, the Events created by handling pending Signals could push the Workflow over the limit and get it Terminated. The Temporal Server emits warnings after every 10K Events; if you see those warnings, it’s a good time to Continue-As-New.

Using the Go SDK, Signal draining might look like this:

```go
// Drain the Signal channel without blocking so that no pending Signal is lost.
// Assumes signalChan came from workflow.GetSignalChannel(ctx, signalName).
for {
	var signalVal string
	ok := signalChan.ReceiveAsync(&signalVal)
	if !ok {
		break // nothing pending
	}
	workflow.GetLogger(ctx).Info("Received signal!", "signal", signalName, "value", signalVal)
	// PROCESS SIGNAL
}
return workflow.NewContinueAsNewError(....)
```

That is, receive and process any and all pending Signals until none remain. Since this snippet calls Continue-As-New as soon as no Signals are left, any Signals that arrive afterwards will be handled by the new instance of this Workflow.

Also be aware that Signals can arrive fast enough that your Signal draining never finishes. There must be a period of quiet time to allow the Continue-As-New to happen without Signal loss.
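The non-blocking drain can be modeled in plain Go with a buffered channel and a `select` that has a `default` case. Like `ReceiveAsync`, this returns immediately when nothing is pending. This is only an analogy: the buffered channel stands in for a Temporal Signal channel, and `drainPending` is a hypothetical helper, not an SDK function.

```go
package main

import "fmt"

// drainPending receives every value already buffered in ch without blocking,
// mirroring the ReceiveAsync loop: it stops as soon as nothing is pending.
func drainPending(ch <-chan string) []string {
	var drained []string
	for {
		select {
		case v, ok := <-ch:
			if !ok {
				return drained // channel closed: nothing more will arrive
			}
			drained = append(drained, v) // process the "Signal" here
		default:
			return drained // nothing pending right now: safe to hand off
		}
	}
}

func main() {
	signals := make(chan string, 10)
	signals <- "update-card"
	signals <- "pause"
	fmt.Println(drainPending(signals))      // [update-card pause]
	fmt.Println(len(drainPending(signals))) // 0
}
```

The loop ends only when the buffer happens to be empty at the moment it checks, so a producer that never pauses keeps it running. That is the quiet-period concern described above.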

- Similarly, because Continue-As-New results in a new execution, any pending Activities that are not awaited are lost and sent a cancellation. This can happen when your Workflow starts Activities asynchronously but does not await them or otherwise block on their results. Consider the following Go example:

```go
// Anti-pattern; error handling removed for brevity.
func Workflow(ctx workflow.Context) error {
	// The Activity is scheduled, but its Future is discarded and never awaited.
	_ = workflow.ExecuteActivity(ctx, Activity)
	// The Workflow immediately continues as new, abandoning the Activity.
	return workflow.NewContinueAsNewError(ctx, Workflow)
}
```

In this example, an Activity is started, but the Workflow does not block on its result and instead immediately Continues-As-New. Besides resulting in an infinite loop of new Workflow Executions, this means the results of the Activity can never be retrieved.

Note that in this example, the Worker only ever gets a single Workflow Task from the Server per run; since Workflow Tasks are what carry Events such as ActivityTaskScheduled that inform the Worker to run Activities, the Activity in the above snippet will never even run, let alone finish. In some cases, depending on what your Workflow does (for example, sleeping between ExecuteActivity calls, which yields to the Temporal Server and allows the Worker to pick up the new Activity Tasks), these Activities may still run even though their results are never retrieved.
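One way to avoid this is to block on every Activity future before Continuing-As-New. The plain-Go model below shows only that ordering. Goroutines and one-slot channels stand in for Activities and their futures, and `startWork` and `finishRun` are hypothetical names. In the Go SDK itself, the blocking step would be a call such as `future.Get(ctx, &result)` on the value returned by `workflow.ExecuteActivity`.

```go
package main

import "fmt"

// future is a minimal stand-in for an Activity future: a one-slot channel
// that will receive exactly one result.
type future chan int

// startWork launches work asynchronously, like ExecuteActivity without Get.
func startWork(n int) future {
	f := make(future, 1)
	go func() { f <- n * n }()
	return f
}

// finishRun blocks on every outstanding future before returning, so no result
// is dropped when the run hands off to the next one.
func finishRun(pending []future) []int {
	results := make([]int, 0, len(pending))
	for _, f := range pending {
		results = append(results, <-f) // block until this result is ready
	}
	return results
}

func main() {
	pending := []future{startWork(2), startWork(3)}
	results := finishRun(pending)
	fmt.Println(results) // [4 9]
	// Only now would the run continue as new, carrying results forward.
}
```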

- Similarly, since Continue-As-New closes the current Workflow Execution, it affects how Child Workflows behave. By default, all Child Workflows will be terminated when the Parent closes (including with Continue-As-New). To override this (for example, to allow Child Workflows to continue as if nothing happened), set a ParentClosePolicy. This example snippet in Go will allow the Child to continue running after the Parent calls Continue-As-New:

```go
// enums refers to go.temporal.io/api/enums/v1
cwo := workflow.ChildWorkflowOptions{
	// ...
	// ABANDON lets the Child keep running after the Parent closes.
	ParentClosePolicy: enums.PARENT_CLOSE_POLICY_ABANDON,
}
ctx = workflow.WithChildOptions(ctx, cwo)
childWorkflowFuture := workflow.ExecuteChildWorkflow(ctx, ChildWorkflow)
```

Note that as a result, even though the Child Workflow will continue to run, the direct parent-child relationship is broken. However, since with Continue-As-New there will still be an instance of the Parent, the Child and Parent can still interact with each other via the usual Signal and Query operations (see below for discussion on Run IDs and Workflow IDs).

- Since Continue-As-New is necessary when a Workflow’s Event History risks getting near the limits, deciding when to call Continue-As-New depends on how your Workflow generates Events: principally, (a) how many Events get added to the Event History and how often (e.g., through Activity executions or Timers); and (b) how much data is included with each Event (e.g., what your Activities return). Recall that the Temporal Server will terminate your Workflow if its Event History goes beyond 50K Events or 50MB in size.

Note that in many cases you can determine whether you should Continue-As-New by using the `GetContinueAsNewSuggested` method on a Workflow’s Info: for example, `info.GetContinueAsNewSuggested()` in Go, or `info.is_continue_as_new_suggested()` in Python (similar methods exist in every other SDK as well). This call is only supported by Temporal Server version 1.20 and later.
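Where that call isn’t available, a hand-rolled check could compare the current Event count and History size against fractions of the two limits. The sketch below is plain Go. The `historyStats` type and the margin values are hypothetical, and treating 50MB as 50 * 1024 * 1024 bytes is an assumption. In a real Workflow the counts would come from the SDK rather than being passed in by hand.

```go
package main

import "fmt"

// Limits quoted in this guide.
const (
	maxHistoryEvents = 51200            // 50Ki Events
	maxHistoryBytes  = 50 * 1024 * 1024 // 50MB, assuming binary megabytes
)

// historyStats is a hypothetical snapshot of a run's Event History.
type historyStats struct {
	events int
	bytes  int
}

// shouldContinueAsNew reports whether either measure has crossed its margin,
// expressed as a fraction of the corresponding limit. Lower margins leave more
// room for the Events created while draining Signals and finishing work.
func shouldContinueAsNew(s historyStats, eventMargin, sizeMargin float64) bool {
	return float64(s.events) >= eventMargin*maxHistoryEvents ||
		float64(s.bytes) >= sizeMargin*maxHistoryBytes
}

func main() {
	// 20% of 51,200 is 10,240 Events; 50% of 50MB is 25MB.
	fmt.Println(shouldContinueAsNew(historyStats{events: 12000, bytes: 1 << 20}, 0.2, 0.5)) // true
	fmt.Println(shouldContinueAsNew(historyStats{events: 500, bytes: 1 << 20}, 0.2, 0.5))   // false
	fmt.Println(shouldContinueAsNew(historyStats{events: 500, bytes: 30 << 20}, 0.2, 0.5))  // true
}
```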

Should you need to estimate for yourself, here are some heuristics for measuring how close your Workflow is to hitting the History limits and, therefore, determining when to call Continue-As-New:

Length of Event History

- A Workflow with no Activities, Timers, Markers, or other event-generating operations will generate five Events: one for Workflow started, one for completed, and three for a single Workflow Task being scheduled, started, and completed.

- A Workflow with only a single Activity execution will generate an Event History of length 11. The Activity itself results in three Events (Activity Task Scheduled, Started, and Completed); the Workflow Tasks before and after the Activity result in six Events (Workflow Task Scheduled, Started, and Completed, twice); and every Workflow has a WorkflowExecutionStarted and a WorkflowExecutionCompleted Event at the beginning and end, respectively.

- A Workflow with a single Activity in a loop will generate an Event History of length 5 + 6 * i (where i is the number of loop iterations). That is, each Activity effectively results in six Events.

- A Workflow with a single Timer and nothing else (e.g., only a workflow.Sleep or workflow.NewTimer call) will generate 10 Events. Timers themselves generate two Events, TimerStarted and TimerFired. When the Workflow waits for the Timer to expire, it yields back to the Server; the Workflow Task that runs after the Timer fires generates three more Events, in addition to the three Events for the Workflow Task that started the Timer.

- A Signal itself generates only a single Event, and in normal situations it also triggers a Workflow Task so that it can be handled. However, whether there are Workflow Tasks associated with it depends on the nature of the Signal. Consider a Workflow that starts and then immediately blocks waiting for a Signal (and then completes successfully once a Signal is received). There are two scenarios in which this Workflow could run:

- Signal-With-Start includes the Signal information as part of starting the Workflow and triggers the Signal handler immediately. The result is a Workflow with 6 Events.

- If the Workflow progresses as far as it can (allowing the Worker to move on to other Workflows or just wait for more Tasks), for example by blocking while waiting for incoming Signals, then a new Workflow Task needs to be issued to the Worker for the Signal to be handled, resulting in three additional Events. This Workflow (one that does nothing but block until a single Signal is received) completes with 9 Events.
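These counts compose into simple arithmetic. The sketch below encodes them in plain Go; the function names are hypothetical, and the figures are this guide’s estimates for the simple Workflow shapes described, not a general formula for every Workflow. It also uses the single-Activity loop to estimate how many iterations fit under a chosen Event budget.

```go
package main

import "fmt"

const (
	workflowStartAndEnd = 2 // WorkflowExecutionStarted + WorkflowExecutionCompleted
	workflowTaskEvents  = 3 // Workflow Task Scheduled, Started, Completed
	activityEvents      = 3 // Activity Task Scheduled, Started, Completed
	timerEvents         = 2 // TimerStarted + TimerFired
)

// activityLoopEvents is the history length for a Workflow that runs one
// Activity per loop iteration: 5 + 6*i.
func activityLoopEvents(iterations int) int {
	return workflowStartAndEnd + workflowTaskEvents +
		iterations*(activityEvents+workflowTaskEvents)
}

// singleTimerEvents covers a Workflow that only sleeps once: start and end,
// the Task that starts the Timer, the Timer itself, and the Task after it fires.
func singleTimerEvents() int {
	return workflowStartAndEnd + workflowTaskEvents + timerEvents + workflowTaskEvents
}

// maxActivityIterations is the largest i with activityLoopEvents(i) <= budget
// (0 if even an empty run would exceed the budget).
func maxActivityIterations(budget int) int {
	if budget < activityLoopEvents(0) {
		return 0
	}
	return (budget - activityLoopEvents(0)) / (activityEvents + workflowTaskEvents)
}

func main() {
	fmt.Println(activityLoopEvents(1))        // 11
	fmt.Println(singleTimerEvents())          // 10
	fmt.Println(maxActivityIterations(51200)) // 8532
	fmt.Println(maxActivityIterations(10240)) // 1705
}
```

In practice you would Continue-As-New well before that ceiling, since Signals, Timers, and other operations add Events too.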

Size of Event History

- The primary determining factor for Event History size is your Activities’ parameters and return values, along with the input arguments of Signals and Updates. All inputs and returns of Activities are recorded in the Event History. For example, if you have an Activity that takes no parameters but returns 500KB of data (measured in its serialized form), you’ll be able to run fewer than 100 of these Activities, as the history size limit is 50MB. (Since other Events and metadata on Events also contribute to the History size, you won’t be able to run exactly 100 of them.)

- If your Workflow’s Activities need to take in or return a lot of data (say, more than 1-2MB), consider either compressing it via a Data Converter or storing the actual data in a blob store like AWS S3 and passing the URL of the uploaded blob instead.

- For performance-sensitive applications, it may also be worth caching data on Worker hosts and routing Activities that need to use the data to the same host.
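The blob-store approach is often called the claim-check pattern: the Activity stores the large payload elsewhere and returns only a small reference, so only the reference lands in the Event History. The sketch below uses an in-memory map as a stand-in for a real store such as S3. `blobStore`, `memStore`, `payloadRef`, and the size threshold are hypothetical, and a real implementation would also need to handle store errors and cleanup.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// blobStore is a hypothetical interface for an external store (e.g. S3).
type blobStore interface {
	Put(key string, data []byte)
	Get(key string) ([]byte, bool)
}

// memStore is an in-memory stand-in used only for illustration.
type memStore map[string][]byte

func (m memStore) Put(key string, data []byte) { m[key] = data }

func (m memStore) Get(key string) ([]byte, bool) {
	d, ok := m[key]
	return d, ok
}

// payloadRef is what an Activity would return instead of the raw bytes.
type payloadRef struct {
	Inline []byte // small payloads travel inline
	Key    string // large payloads are referenced by key
}

// checkIn stores payloads larger than threshold and returns a reference.
func checkIn(s blobStore, data []byte, threshold int) payloadRef {
	if len(data) <= threshold {
		return payloadRef{Inline: data}
	}
	sum := sha256.Sum256(data) // content-addressed key
	key := hex.EncodeToString(sum[:])
	s.Put(key, data)
	return payloadRef{Key: key}
}

// checkOut resolves a reference back into the original bytes.
func checkOut(s blobStore, ref payloadRef) ([]byte, bool) {
	if ref.Key == "" {
		return ref.Inline, true
	}
	return s.Get(ref.Key)
}

func main() {
	store := memStore{}
	big := make([]byte, 2<<20) // 2 MiB payload
	ref := checkIn(store, big, 1<<20)
	fmt.Println(ref.Inline == nil, len(ref.Key)) // true 64
	data, ok := checkOut(store, ref)
	fmt.Println(ok, len(data)) // true 2097152
}
```

In this arrangement the Workflow only passes `payloadRef` values between Activities, and only Activities call `checkIn` and `checkOut`, since Workflow code itself should not perform I/O.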

- Since Temporal only allows one actively running (“Open”) Workflow instance per Workflow ID, the Run ID exists to distinguish between invocations. One of the things Continue-As-New does for you is atomically create a new run of the same Workflow ID. (If you did this on your own without Continue-As-New, that is, by completing the Workflow and then starting another, there would be a time gap between the two runs, thereby risking Signal loss.)

A consequence of this is that you need to consider how you interact with the Workflow. Signals and Queries, for example, need to be able to identify a specific instance of the Workflow. The default behavior for the Temporal SDKs is that when a Run ID is not given, the currently running instance (or the most recent one, in the case of Queries) is used.

As an example, consider the signature of the Go SDK’s `client.SignalWorkflow`:

```go
SignalWorkflow(ctx context.Context, workflowID string, runID string, signalName string, arg interface{}) error
```

With `runID` as a “required” parameter, it may be tempting to conclude that you need to retrieve and specify a Run ID for every Signal sent. And if that’s the case, why not cache that Run ID to avoid the query time cost for updating it? (In fact, Run IDs aren’t deterministic, so you should never cache them.) The problem with this is that Continue-As-New closes the current Workflow Execution and starts a new one with a new Run ID, and signaling a closed Workflow results in a “workflow execution already completed” error.

Instead, SignalWorkflow allows an empty Run ID. In that case, the Signal is sent to the currently open execution of the given Workflow ID.

The other SDKs have similar behavior. For example, in Python, if you call a method like `signal` or `describe` on a Workflow Handle that doesn’t have a Run ID, it will go to the most recent Run.
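To see why an empty Run ID is the safer default, here is a toy in-memory model (plain Go, no Temporal SDK) of how a Signal could be routed. An empty Run ID resolves to the latest run of the Workflow ID, while a Run ID cached from before a Continue-As-New points at a closed run. The `registry` type, its methods, and the error value are hypothetical; only the behavior they imitate comes from the discussion above.

```go
package main

import (
	"errors"
	"fmt"
)

// errCompleted imitates the "workflow execution already completed" error.
var errCompleted = errors.New("workflow execution already completed")

// registry tracks the runs of each Workflow ID; only the latest may be open.
type registry struct {
	runs map[string][]string // workflowID -> run IDs, oldest first
	open map[string]bool     // runID -> still running?
}

func newRegistry() *registry {
	return &registry{runs: map[string][]string{}, open: map[string]bool{}}
}

// start opens a new run and closes the previous one in the same step,
// imitating Continue-As-New.
func (r *registry) start(workflowID, runID string) {
	if prev := r.runs[workflowID]; len(prev) > 0 {
		r.open[prev[len(prev)-1]] = false
	}
	r.runs[workflowID] = append(r.runs[workflowID], runID)
	r.open[runID] = true
}

// signal resolves an empty runID to the latest run, then checks it is open.
func (r *registry) signal(workflowID, runID string) (string, error) {
	if runID == "" {
		runs := r.runs[workflowID]
		if len(runs) == 0 {
			return "", errors.New("workflow not found")
		}
		runID = runs[len(runs)-1]
	}
	if !r.open[runID] {
		return "", errCompleted
	}
	return runID, nil
}

func main() {
	r := newRegistry()
	r.start("billing-42", "run-1")
	cached := "run-1"
	r.start("billing-42", "run-2") // Continue-As-New

	_, err := r.signal("billing-42", cached)
	fmt.Println(err) // workflow execution already completed
	got, _ := r.signal("billing-42", "")
	fmt.Println(got) // run-2
}
```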

How to Interact with Indefinitely Long-Running Workflows

Aside from the above considerations for Continue-As-New, interacting with a long-running Workflow is not meaningfully different from interacting with any other Temporal Workflow. That said, here are a few things to keep in mind when interacting with such Workflows:

- Signals are your way to get application-specific data into a Workflow, and they can update Workflow state. In particular:

- They can reliably alter the Workflow’s behavior as necessary (e.g., to update the credit card information for a monthly billing subscription).

- They can start Activity executions (e.g., in a sensor proxy use case, to call out to the sensor to update its data collection rates).

- They can only be sent to Open (running) Workflows. See above for discussion on Run IDs vs Workflow IDs. (An alternative to Signal is Signal-With-Start, which will start a Workflow if it’s not already running.)

Note that, in rare cases, Signals may be duplicated. If a repeated Signal would be a problem for your application, make your Signal handler idempotent (e.g., through the use of idempotency keys).
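One way to apply idempotency keys is to have each sender attach a unique key and have the Workflow remember which keys it has already applied. The sketch below shows only that bookkeeping, in plain Go; the `cardUpdate` type and its `IdempotencyKey` field are hypothetical. In a long-running Workflow, the set of seen keys is part of the state that must be carried across Continue-As-New, and it may need pruning so that it doesn’t grow without bound.

```go
package main

import "fmt"

// cardUpdate is a hypothetical Signal payload carrying its own idempotency key.
type cardUpdate struct {
	IdempotencyKey string
	CardLast4      string
}

// subscriptionState is the Workflow state the handler mutates.
type subscriptionState struct {
	CardLast4 string
	Seen      map[string]bool // keys already applied; carry across Continue-As-New
}

// applyCardUpdate ignores a Signal whose key was already applied and reports
// whether the state changed.
func (s *subscriptionState) applyCardUpdate(u cardUpdate) bool {
	if s.Seen[u.IdempotencyKey] { // reading a nil map is safe and returns false
		return false // duplicate delivery: no-op
	}
	if s.Seen == nil {
		s.Seen = map[string]bool{}
	}
	s.Seen[u.IdempotencyKey] = true
	s.CardLast4 = u.CardLast4
	return true
}

func main() {
	var s subscriptionState
	u := cardUpdate{IdempotencyKey: "req-1", CardLast4: "4242"}
	fmt.Println(s.applyCardUpdate(u)) // true
	fmt.Println(s.applyCardUpdate(u)) // false
	fmt.Println(s.CardLast4)          // 4242
}
```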

You can read more about Signals and learn how to use them in our Documentation.

- Queries are the opposite of Signals: they allow you to get application-specific data from a Workflow. They do not modify Workflow state and are not added to the Event History. As such:

- They return the current state within the Workflow. While this can be any application-specific information, a Query cannot result in a change in state.

- They return that state even if the Workflow is Closed.

You can read more about Queries and learn how to use them in our Documentation.

- Updates are a way to combine a Signal (sending data into a Workflow) and a blocking Query (waiting for data to come back from a Workflow) into a single operation. An Update can both change Workflow state and return data to the caller, and so:

- They can be validated before being handled. This lets you decide whether an Update request is valid (e.g., reject the request if it would put the Workflow into an erroneous state).

- They run handler code, similar to a Signal. Just like a Signal handler or any other Workflow code, the Update handler must be deterministic, but it can otherwise start Activities, run Child Workflows, or use any other Workflow features.
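The validate-then-handle split can be sketched in plain Go as two functions: a validator that inspects the request without touching state, and a handler that runs only if the validator accepts. This models the idea rather than the SDK API; the `account` type and the method names are hypothetical. The property to preserve is that the validator is read-only, so a rejected Update leaves the Workflow state exactly as it was.

```go
package main

import (
	"errors"
	"fmt"
)

// account is hypothetical Workflow state for an entity such as a bank account.
type account struct {
	Balance int
}

// validateWithdraw inspects the request without modifying state.
func (a *account) validateWithdraw(amount int) error {
	if amount <= 0 {
		return errors.New("amount must be positive")
	}
	if amount > a.Balance {
		return errors.New("insufficient funds") // would leave an erroneous state
	}
	return nil
}

// handleWithdraw mutates state and returns the new balance to the caller.
func (a *account) handleWithdraw(amount int) int {
	a.Balance -= amount
	return a.Balance
}

// update runs the validator first and the handler only if validation passes.
func (a *account) update(amount int) (int, error) {
	if err := a.validateWithdraw(amount); err != nil {
		return a.Balance, err
	}
	return a.handleWithdraw(amount), nil
}

func main() {
	a := &account{Balance: 100}
	fmt.Println(a.update(30))  // 70 <nil>
	fmt.Println(a.update(500)) // 70 insufficient funds
}
```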

You can read more about Updates and learn how to use them in our Documentation.

Conclusion

This guide outlined the primary considerations when designing Temporal Workflows to run for an indefinitely long time. Temporal allows you to create Workflows that can trivially last a long time: a Workflow that sleeps and wakes up once a year to increment a counter will run reliably. But getting a Workflow to truly run forever while accomplishing “real work” (as in examples like monthly billing subscriptions or sensor proxies) requires a bit of extra consideration. Principally:

- To avoid having your Workers stuck in an endless process of reading and replaying long Event Histories, you’ll need to “Continue-As-New” these Workflows to reset the Event History. Note that this applies not only to long-running Workflows, but also to Workflows containing many Activities or Activities with large inputs and returns.

- Interacting with such Workflows from outside the Workflow is really no different from interacting with other Workflows, except for how you identify them: Continue-As-New creates a new Run ID for the Workflow instance. This means that when you use APIs like Signal and Query that optionally take a Run ID, you need to take care to either not specify the Run ID (thus automatically using the latest run) or otherwise ensure that you’re using the correct one.

While the practices described in this guide apply generally to long-running Workflows, they’re particularly well suited for applications implementing patterns like the Actor Model: Workflow instances that represent an “Actor” or “Entity” will typically need to run for a very long time and handle a virtually unlimited number of incoming messages.